A Hierarchical Deep Reinforcement Learning Framework with High Efficiency and Generalization for Fast and Safe Navigation

Image credit: Unsplash

Abstract

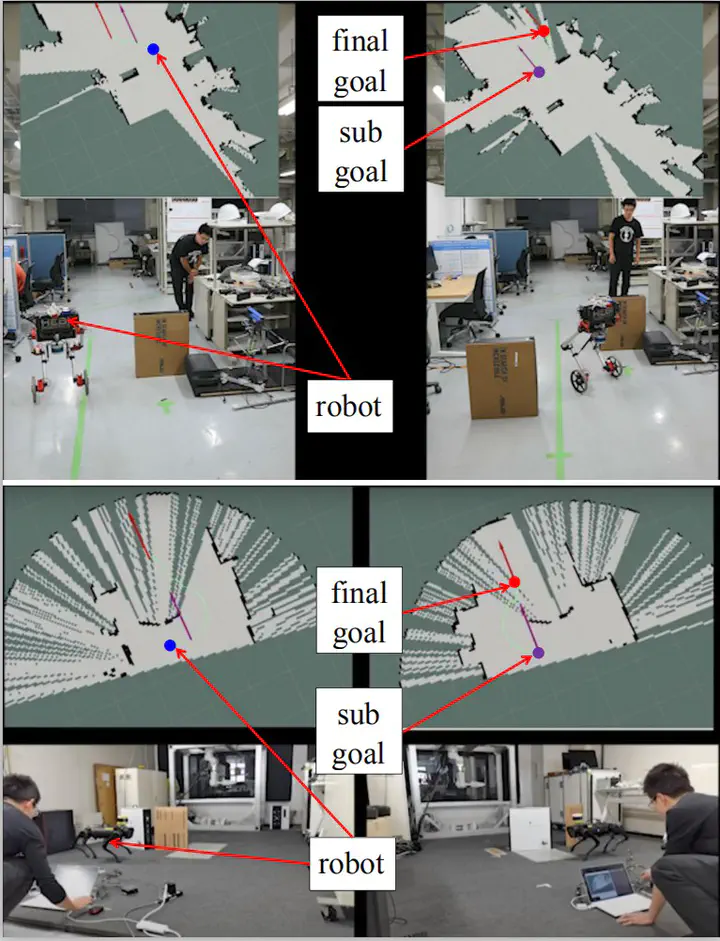

We present a hierarchical deep reinforcement learning (DRL) framework with high sampling efficiency and sim-to-real transfer ability for fast and safe navigation. The low-level DRL policy enables the robot to move towards the target position while keeping a safe distance from obstacles; the high-level DRL policy is added to further enhance navigation safety. We select a waypoint located on the path from the robot to the ultimate goal as the sub-goal to reduce the state space and avoid sparse rewards. Moreover, the path is generated based on either a local or a global map, which can significantly improve the sampling efficiency, safety, and generalization ability of the proposed DRL framework. Additionally, a target-directed representation for the action space can be derived from the sub-goal to improve motion efficiency and reduce the action space. To demonstrate the sampling efficiency, motion performance, obstacle avoidance, and generalization ability of the proposed framework, we conduct extensive comparisons with non-learning navigation methods and DRL-based baselines,